AMD AI Workbench Overview

The AMD AI Workbench is an interface for developers to manage the lifecycle of their AI stack. It provides a low-code option for running and managing AI workloads. This article introduces how to develop, run, and manage AI workloads in the AMD AI Workbench.

AMD AI Workbench functionality

The AMD AI Workbench includes the following capabilities:

AIM Catalog

The AMD AI Workbench offers a comprehensive catalog of AMD Inference Microservices (AIMs), which are optimized inference containers that provide standardized, production-ready services for state-of-the-art large language models (LLMs) and other AI models. Each AIM includes built-in model caching and hardware optimization for AMD Instinct™ GPUs. Developers can easily discover, deploy, and fine-tune compatible models for their AI use cases.

AI workspaces

The AMD AI Workbench gives developers tools, frameworks, and easy access to GPU resources to accelerate AI development and experimentation. It features a comprehensive catalog of AI workloads and models optimized for AMD compute. The workloads include common developer tools such as Jupyter Notebooks and Visual Studio Code, and popular frameworks such as PyTorch and TensorFlow.

Training & fine-tuning

Fine-tuning a model allows developers to customize it for their specific use case and data. The AMD AI Workbench provides a certified list of base models that developers can fine-tune, and allows customization of certain hyperparameters to achieve the best results.

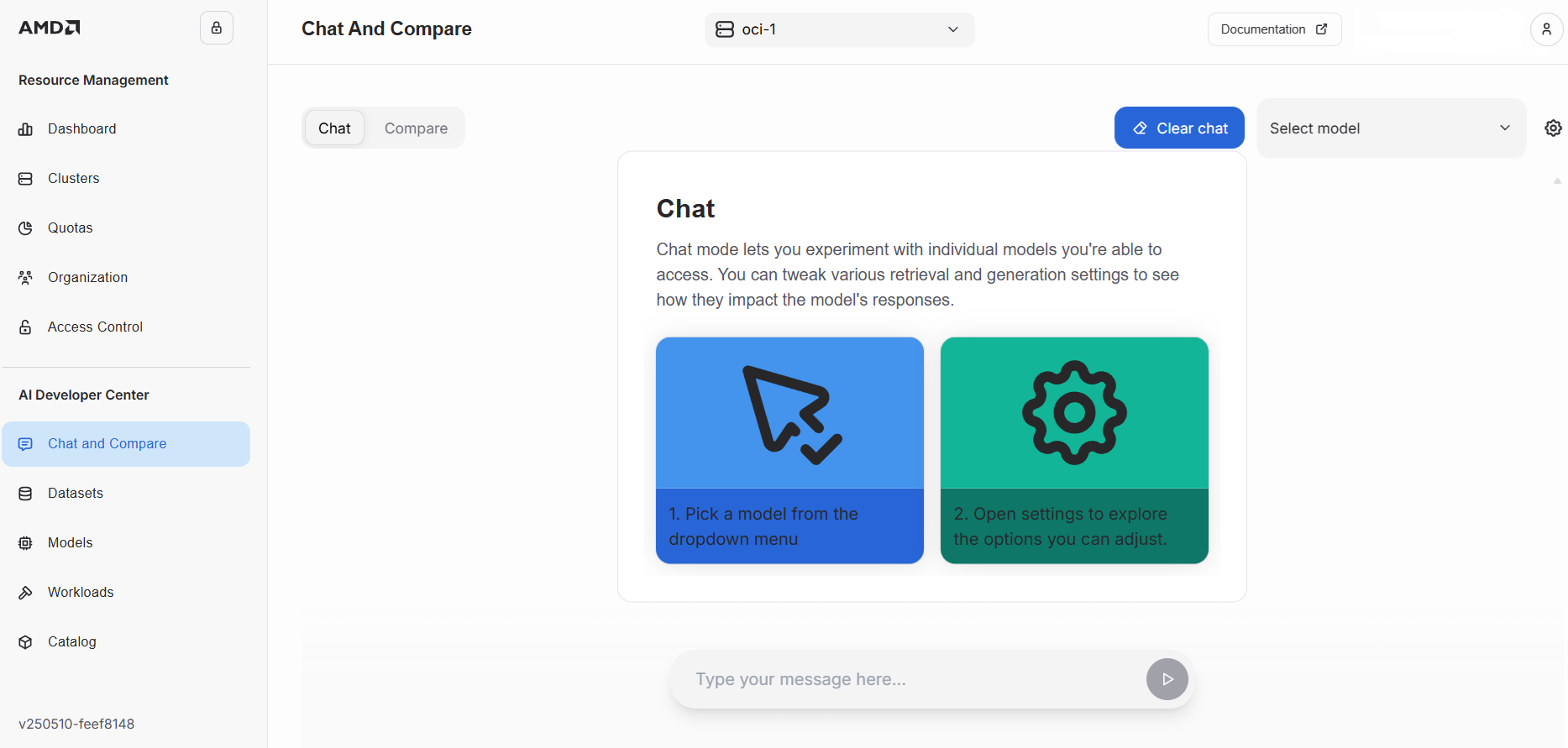

Chat and compare

The chat page allows developers to experiment with models they have access to. Developers can modify generation parameters to see how they affect the model’s response. The model comparison view allows developers to compare the output of different models using the same settings.
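The idea behind the comparison view, one prompt and one set of generation parameters sent to several models, can be sketched in a few lines. This is an illustration only: the parameter names (`temperature`, `top_p`, `max_tokens`), the payload layout, and the model names are assumptions borrowed from common chat-completion APIs, not the Workbench's documented format.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical generation parameters; the Workbench may expose others."""

    temperature: float = 0.7
    top_p: float = 0.9
    max_tokens: int = 256


def build_comparison(prompt, models, settings):
    """Return one request payload per model, all sharing the same settings."""
    return {
        model: {
            "model": model,
            "messages": [{"role": "user", "content": prompt}],
            **asdict(settings),
        }
        for model in models
    }


# Placeholder model names, used only for illustration.
payloads = build_comparison(
    "Summarize the benefits of model caching.",
    ["model-a", "model-b"],
    GenerationSettings(temperature=0.2),
)
```

Because the settings object is frozen and shared, any difference between the responses can be attributed to the models rather than to the parameters.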

GPU-as-a-Service

The AMD AI Workbench provides developers with self-service access to workspaces with GPU resources. Platform admins can set project quotas for GPU usage so teams always have the right amount of resources available.

API keys for programmatic access

API keys provide secure programmatic access to deployed AI models and project resources. Developers can create API keys to integrate deployed models into applications, automate inference workflows, or access endpoints from external systems. API keys support configurable expiration times, renewal, and fine-grained access control by binding them to specific model deployments.
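As a rough sketch of what programmatic access might look like, the following builds an authenticated HTTP request using only the Python standard library. The endpoint URL, the bearer-token header scheme, the payload shape, and the model name are all assumptions made for illustration; check your deployment's details for the actual endpoint and authentication format.

```python
import json
import urllib.request

# Hypothetical values: replace with the endpoint and key of your own deployment.
ENDPOINT_URL = "https://workbench.example.com/v1/chat/completions"
API_KEY = "replace-with-your-api-key"


def build_request(prompt, model, url=ENDPOINT_URL, api_key=API_KEY):
    """Build (but do not send) an authenticated inference request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Assumed scheme: the API key is passed as a bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30.0):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it possible to inspect or log the request without touching the network. In practice, load the key from an environment variable or a secret store rather than hard-coding it.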

Running AI workloads from the command line

Developers can also deploy and run AI workloads from the command line using kubectl. The AI workloads are pre-validated, open source, and continuously updated.
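To give a sense of what a kubectl-based deployment involves, the sketch below generates a minimal Kubernetes Pod manifest that requests AMD GPUs. It is not one of the pre-validated workloads, which ship with their own manifests. The workload name and container image are placeholders, and the `amd.com/gpu` resource key assumes the AMD GPU device plugin for Kubernetes is installed on the cluster.

```python
import json


def build_workload_manifest(name, image, gpus=1):
    """Return a Kubernetes Pod manifest that requests AMD GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Assumed resource key, as exposed by the AMD GPU device plugin.
                    "resources": {"limits": {"amd.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Placeholder name and image, used only for illustration.
    manifest = build_workload_manifest(
        "example-workload",
        "registry.example.com/example-workload:latest",
    )
    print(json.dumps(manifest, indent=2))
```

Since kubectl accepts JSON as well as YAML, the output can be piped straight to the cluster, for example with `python build_manifest.py | kubectl apply -f -` (the script file name is hypothetical).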

See more details on how to run workloads from the command line.